11 Efficient Log Management Best Practices to Know in 2026

Written by John Martinez. Last updated on June 25, 2025.

Summary: In this article, we will spotlight 11 log management best practices you should know to build efficient logging and monitoring programs. You’ll learn how to establish policies and take a proactive approach to collecting, analyzing, and storing business-critical log data. By the end of this article, you’ll have a clearer understanding of how logs can help security teams detect suspicious activity, address system performance issues, identify trends and opportunities, improve regulatory compliance, and mitigate cyberattacks.

1. Formulate a Strategy and Establish Policies

A sound strategy and clear policies are foundational to every organization’s security logging best practices. Consider logging mechanisms and tools, data hosting and storage, compliance requirements, analytic requirements, and other operational needs relevant to logging. Be sure to establish policies for critical activities, including log review, log retention, archiving and purging, redundant data storage, monitoring, security incident response, and so on.

Logs should be examined continuously for unusual activity, potential threats, trends, and any issues that could adversely affect system security or performance. Supplement this analysis with automated alerting, so that security issues are flagged immediately, and with proactive remediation. Log retention policies vary depending on compliance regulations and other factors, such as storage capacity. Simulation and rehearsal policies help prepare response teams to address cyberattacks, data loss, system failures, and other security-related incidents.

2. Identify What Needs to Be Logged and Monitored

Decide what activities need to be logged and what level of monitoring each activity requires. Examples of commonly logged data include transactions that must meet compliance standards, authentication events (e.g., successful and failed login attempts and password changes), database queries, and server commands. At a minimum, every log entry should identify the actor, the action performed, the time, and the actor’s geolocation, browser, or code script name.
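As a minimal sketch of the minimum fields named above, the Python dataclass below refuses to create an audit entry that lacks an actor, an action, or a source, and stamps the time in UTC when the caller omits it. The class name `AuditEvent` and its field names are hypothetical, not a standard schema; `source` stands in for whichever of geolocation, browser, or script name applies.

```python
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    """One audit log entry. Hypothetical schema for illustration only."""

    actor: str   # who: user or service account
    action: str  # what: e.g. "login_failed", "password_change"
    source: str  # where from: geolocation, browser, or script name
    timestamp: str = ""  # when: ISO 8601 UTC, filled in if omitted

    def __post_init__(self) -> None:
        # Refuse entries that cannot answer "who did what, and from where".
        for name in ("actor", "action", "source"):
            if not getattr(self, name):
                raise ValueError(f"audit event is missing required field {name!r}")
        if not self.timestamp:
            # The dataclass is frozen, so set the default via object.__setattr__.
            object.__setattr__(self, "timestamp", datetime.now(timezone.utc).isoformat())


event = AuditEvent(actor="alice", action="password_change", source="cron:rotate_keys.sh")
print(asdict(event))
```

Validating at creation time means an incomplete entry fails loudly in development instead of silently producing a log line that cannot support an investigation later.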

Log management best practices recommend moving logs off the devices that create them, so that malicious actors who target a specific system or device cannot also tamper with its records. To get the most value from logged data, capture it at different points within the network and from multiple sources, including devices, servers, applications, and cloud infrastructure. End-to-end logging of this kind provides greater visibility and a holistic view of the entire system.

3. Understand How Your Logs Are Structured

Structured logging is widely considered a best practice and is a critical part of many log management strategies. It ensures log messages are formatted consistently. Separating the components of each message in a standardized way makes it easier for humans to scan log data quickly, and it improves efficiency, because computers can read, parse, and search structured logs faster than unformatted text. The one caveat is that applying a schema can strip out critical context that was present in the raw log message. The key is to understand which data is lost when the structure is applied.

Structured logs also help with analysis and troubleshooting: they give IT professionals deeper insight and provide input for sophisticated data visualizations. When choosing an enterprise logging solution, consider a tool that uses key-value pair (KVP) or JavaScript Object Notation (JSON) log formats, both of which are easy to parse and consolidate.
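Python's standard `logging` module does not ship a JSON formatter, but a small `logging.Formatter` subclass can emit one JSON object per line. The sketch below uses illustrative field names (`time`, `level`, `logger`, `message`) that are assumptions, not a required schema. It also copies any fields passed through `extra=` into the output, which is one way to avoid losing context when a structure is applied.

```python
import json
import logging
from datetime import datetime, timezone

# Attribute names every LogRecord carries; anything else arrived via `extra=`.
_STANDARD_ATTRS = set(vars(logging.makeLogRecord({}))) | {"message", "asctime"}


class JsonFormatter(logging.Formatter):
    """Render each record as a single-line JSON object (illustrative field names)."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": datetime.fromtimestamp(record.created, timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Keep caller-supplied context instead of silently dropping it.
        for key, value in vars(record).items():
            if key not in _STANDARD_ATTRS:
                entry[key] = value
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        # default=str keeps non-JSON values (e.g. datetimes) from raising.
        return json.dumps(entry, default=str)


handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
log = logging.getLogger("auth")
log.addHandler(handler)
log.setLevel(logging.INFO)
log.warning("login failed", extra={"user": "alice", "src_ip": "203.0.113.7"})
```

Because each line is a self-contained JSON object, downstream tools can parse it without custom regular expressions, and new context fields appear automatically without changing the formatter.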

4. Centralize Logging

Log data comes from many disparate sources, including networks, applications, devices, and various other points throughout the IT infrastructure. Before it can be used efficiently, log data must be aggregated in a central location where it can be analyzed and correlated. Correlation means analyzing log data from all sources together, using shared metrics and identifiers, to discover how a single activity or event affects different areas of the system.

Centralized logging best practices recommend routing log data to a location that is separate from the production environment. This lets IT teams test and debug issues without affecting business-critical systems. It also makes it harder for attackers who compromise a production host to delete its log data, and it reduces the risk of losing logs when autoscaled instances are terminated.
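To make the correlation step concrete, here is a minimal Python sketch that merges events from several sources and groups them into per-request timelines. It assumes every event already carries a shared `request_id` and an ISO 8601 `time` field; both names and the sample events are hypothetical, and the approach only works if every source propagates the same identifier.

```python
from collections import defaultdict
from datetime import datetime


def correlate(*sources: list[dict]) -> dict[str, list[dict]]:
    """Merge events from several sources and group them by a shared request ID."""
    timelines: dict[str, list[dict]] = defaultdict(list)
    for source in sources:
        for event in source:
            timelines[event["request_id"]].append(event)
    # Order each request's events chronologically so the cross-system story reads top to bottom.
    for events in timelines.values():
        events.sort(key=lambda e: datetime.fromisoformat(e["time"]))
    return dict(timelines)


web = [{"request_id": "r1", "time": "2025-06-25T10:00:00+00:00", "source": "nginx", "msg": "POST /login"}]
app = [{"request_id": "r1", "time": "2025-06-25T10:00:01+00:00", "source": "auth-service", "msg": "bad password"}]
db = [{"request_id": "r2", "time": "2025-06-25T10:00:02+00:00", "source": "postgres", "msg": "SELECT 1"}]

for request_id, events in correlate(app, web, db).items():
    print(request_id, [e["source"] for e in events])
# r1 ['nginx', 'auth-service']
# r2 ['postgres']
```

Even though the application events arrive first, the web server's entry for `r1` sorts ahead of them, which is exactly the cross-source ordering a single host's logs cannot show.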

5. Add Context to Log Messages

Log volumes can become quite large, even when organizations diligently apply log management best practices. This can make it challenging to search through long lists of log messages when the need arises. Adding descriptive details to structured log formats simplifies searches and helps with troubleshooting.

Meaningful context can include data such as usernames, IP addresses, timestamps, user identifier codes from client browsers, and other types of unique identifiers associated with user sessions, web pages, eCommerce shopping carts, and so on. Contextual data provides essential details that assist security teams with diagnostics and forensic investigations when problems occur.
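One way to attach this context in Python is the standard library's `logging.LoggerAdapter`, which adds a fixed `extra` dictionary to every call made through it. In the sketch below, the logger name, field names, and values are illustrative; the point is that each line carries the user, IP address, and session ID, so a search for one session returns all of its messages.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)
# Context fields appear on every line, so a search for "session=7f3a" finds them all.
handler.setFormatter(logging.Formatter(
    "%(levelname)s user=%(user)s ip=%(src_ip)s session=%(session_id)s %(message)s"
))
logger = logging.getLogger("shop.checkout")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False

# One adapter per session: every call through it carries the same identifiers.
session_log = logging.LoggerAdapter(
    logger, {"user": "alice", "src_ip": "203.0.113.7", "session_id": "7f3a"}
)
session_log.info("cart updated: 3 items")
session_log.warning("payment declined")
print(stream.getvalue(), end="")
# INFO user=alice ip=203.0.113.7 session=7f3a cart updated: 3 items
# WARNING user=alice ip=203.0.113.7 session=7f3a payment declined
```

Records logged directly through `logger`, bypassing the adapter, would lack these fields and fail to format with this format string, so in practice every call for that logger should go through an adapter or the formatter should supply defaults.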

6. Use Indexing

Along with structured logging and meaningful context, indexing is among the most valuable security logging best practices. Indexed logs support diagnostics, as they are easier to query, especially when searching huge volumes of data.

Indexing saves time, helping teams find the information they need faster, so they can address issues sooner. Plus, analyzing indexed logs at scale can provide insights into broader trends that can be harder to detect in smaller data sets.
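The toy inverted index below shows why indexing speeds up queries: instead of scanning every record, a lookup on `field=value` jumps straight to the matching positions. It is a plain-Python sketch; production log platforms use far more elaborate index structures, and the class and method names here are hypothetical.

```python
from collections import defaultdict


class LogIndex:
    """Toy inverted index: maps (field, value) pairs to positions of matching records."""

    def __init__(self) -> None:
        self.records: list[dict] = []
        self._postings: dict[tuple[str, str], set[int]] = defaultdict(set)

    def add(self, record: dict) -> None:
        position = len(self.records)
        self.records.append(record)
        # Index every field so any of them can be queried without a full scan.
        for field, value in record.items():
            self._postings[(field, str(value))].add(position)

    def search(self, **criteria: str) -> list[dict]:
        """Return records matching every field=value criterion (logical AND)."""
        if not criteria:
            return list(self.records)
        # .get avoids creating empty postings for values never seen.
        sets = [self._postings.get((f, str(v)), set()) for f, v in criteria.items()]
        hits = set.intersection(*sets)
        return [self.records[i] for i in sorted(hits)]


index = LogIndex()
index.add({"event": "login_failed", "user": "alice", "host": "web-1"})
index.add({"event": "login_ok", "user": "alice", "host": "web-2"})
index.add({"event": "login_failed", "user": "bob", "host": "web-1"})
print([r["user"] for r in index.search(event="login_failed", host="web-1")])  # ['alice', 'bob']
```

The cost moves from query time to ingest time: each record is indexed once on arrival, after which lookups touch only the matching entries.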

7. Use Scalable Log Storage

Different types of logs have different retention times, depending on the source of the log and any applicable regulatory requirements. For example, troubleshooting logs typically need to be kept only for a few days, whereas certain security logs must be kept for many years. While having access to more data provides more clarity and better insights, many organizations struggle with the challenge of storing massive volumes of data.
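Retention rules like these are easy to express as data. The sketch below encodes the two examples from the paragraph above, with a three-day window for troubleshooting logs and a seven-year window for security logs; both periods and the `expired` helper are assumptions for illustration, since real values come from your own compliance requirements.

```python
from datetime import datetime, timedelta, timezone

# Assumed retention periods; real values come from your compliance requirements.
RETENTION = {
    "troubleshooting": timedelta(days=3),
    "security": timedelta(days=7 * 365),
}


def expired(log_type: str, created: datetime, now: datetime) -> bool:
    """Return True if a log of this type has outlived its retention period."""
    keep_for = RETENTION.get(log_type)
    if keep_for is None:
        # Unknown types are kept, not purged: an accidental deletion cannot be undone.
        return False
    return now - created > keep_for


now = datetime(2025, 6, 25, tzinfo=timezone.utc)
print(expired("troubleshooting", datetime(2025, 6, 20, tzinfo=timezone.utc), now))  # True
print(expired("security", datetime(2021, 6, 1, tzinfo=timezone.utc), now))          # False
```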

Centralized, cloud-based log storage addresses this problem. A scalable cloud solution provides reliable storage that can keep up with the needs of a growing organization, and it lets IT teams search, analyze, monitor, and alert on log data in the cloud. As an added benefit, moving logs to the cloud adds a layer of security: attackers would need to compromise two networks instead of just one to reach them.

8. Implement Access Controls

As companies grow and become more complex, their IT teams must expand to meet rising demand for services and support. Because few team members need access to every log file, larger teams might consider implementing role-based access control (RBAC). Alternatively, organizations can tighten log security by granting team members permission to access individual log files.

Access controls also help ensure that only certain team members have permission to perform high-level administrative functions, such as purging and permanently deleting log file data.
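A minimal role-based check might look like the following Python sketch. The role names, log source names, and permission set are hypothetical; the sketch shows two ideas from this section: each role sees only the log sources it needs, and only one role may purge log data. Anything not explicitly granted is denied.

```python
from enum import Enum, auto


class Permission(Enum):
    READ = auto()
    PURGE = auto()  # permanently delete log data


# Hypothetical role table: which log sources each role may access, and with what rights.
ROLES: dict[str, dict] = {
    "app-developer": {"sources": {"app"}, "permissions": {Permission.READ}},
    "security-analyst": {"sources": {"app", "auth", "network"}, "permissions": {Permission.READ}},
    "log-admin": {"sources": {"app", "auth", "network"},
                  "permissions": {Permission.READ, Permission.PURGE}},
}


def is_allowed(role: str, source: str, permission: Permission) -> bool:
    """Deny by default: unknown roles, sources, or permissions get no access."""
    grant = ROLES.get(role)
    if grant is None:
        return False
    return source in grant["sources"] and permission in grant["permissions"]


print(is_allowed("app-developer", "auth", Permission.READ))      # False
print(is_allowed("security-analyst", "auth", Permission.PURGE))  # False
print(is_allowed("log-admin", "auth", Permission.PURGE))         # True
```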

9. Implement Real-time Monitoring and Alerts

Enable real-time monitoring for all business-critical environments and applications. IT teams depend on up-to-the-minute data to discover and resolve performance issues, service outages, and other potentially catastrophic problems the moment they arise. Security teams, in turn, can be alerted to threats or anomalous activity that would otherwise be difficult to identify.

Log-based metrics support efficient logging by summarizing and analyzing log data in real time, which speeds up troubleshooting and performance tuning. When security alerts are routed directly to responders' mobile phones or Slack accounts, incident response teams can diagnose and resolve issues faster.
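A common log-based alert counts failed logins per user over a sliding time window. The sketch below is hypothetical throughout: the class name, the default of five failures in 60 seconds (an arbitrary choice), and the fact that `observe` simply returns `True` where a real system would notify the on-call team or post to Slack.

```python
from collections import deque


class FailedLoginAlert:
    """Alert when one user fails to log in `threshold` times within `window` seconds."""

    def __init__(self, threshold: int = 5, window: float = 60.0) -> None:
        self.threshold = threshold
        self.window = window
        self._failures: dict[str, deque[float]] = {}

    def observe(self, user: str, timestamp: float) -> bool:
        """Record one failed login; return True if the user is now over the threshold."""
        recent = self._failures.setdefault(user, deque())
        recent.append(timestamp)
        # Drop failures that have slid out of the time window.
        while recent and timestamp - recent[0] > self.window:
            recent.popleft()
        return len(recent) >= self.threshold


alerts = FailedLoginAlert(threshold=3, window=60)
print([alerts.observe("alice", t) for t in (0, 20, 45)])  # [False, False, True]
print(alerts.observe("bob", 100))  # False: bob's first failure
```

Keeping only timestamps inside the window bounds memory per user, and the same pattern works for any countable event in the log stream.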

10. Secure Your Log Data

While it might be tempting to log everything, being overzealous can lead to costly data breaches or steep fines for compliance violations. Everything that you log must meet strict security standards, and should always be anonymized or encrypted before it is stored. Cybercriminals often target logs to gain access to sensitive data, such as Personally Identifiable Information (PII), credit card numbers, login credentials, and IP addresses.
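Masking sensitive values before they reach storage is one way to act on this advice. The Python sketch below adds a `logging.Filter` to a handler that rewrites the rendered message, replacing what look like card numbers, email addresses, and IPv4 addresses with placeholders. The regular expressions and the `RedactingFilter` name are illustrative assumptions: the patterns will miss some formats and over-match others, they do not touch fields passed through `extra=`, and masking does not replace encryption at rest.

```python
import io
import logging
import re

# Deliberately simple, illustrative patterns; real PII detection needs broader, tested rules.
_PATTERNS = [
    (re.compile(r"\b\d(?:[ -]?\d){12,15}\b"), "[CARD]"),  # 13-16 digits, optional separators
    (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"), "[EMAIL]"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "[IP]"),  # IPv4 only
]


class RedactingFilter(logging.Filter):
    """Mask sensitive values in the rendered message before the handler writes it."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()
        for pattern, placeholder in _PATTERNS:
            message = pattern.sub(placeholder, message)
        record.msg, record.args = message, ()  # store only the redacted text
        return True  # keep the record; only its content changes


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.addFilter(RedactingFilter())
log = logging.getLogger("payments")
log.addHandler(handler)
log.propagate = False

log.warning("charge failed for %s card %s from %s",
            "alice@example.com", "4111 1111 1111 1111", "203.0.113.7")
print(stream.getvalue().strip())
# charge failed for [EMAIL] card [CARD] from [IP]
```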

11. Assess Usefulness of Logs

Certain types of logs are critical, for example file creation logs that support forensic investigations, logs of database queries, and logs that record failed authentication attempts. In contrast, log data collected in a test environment typically has little long-term value. Many logs, however, fall somewhere in between on the value spectrum.

Periodically test your logs to evaluate their usefulness, and be sure to identify any gaps in coverage. The following logs are among the most useful: server/endpoint; VPN/remote access; IDS/IPS, antivirus, and other security tools; and cloud SaaS and IaaS.
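Gaps are easiest to spot by comparing the sources you expect to hear from against what actually arrived recently. In the sketch below, the source names loosely follow the categories listed above and are hypothetical, as are the `coverage_report` helper and its one-day review window.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone

# Sources you expect to hear from; names are illustrative.
EXPECTED_SOURCES = {"endpoint", "vpn", "antivirus", "cloud_saas", "cloud_iaas"}


def coverage_report(events: list[dict], now: datetime,
                    window: timedelta = timedelta(days=1)) -> dict:
    """Count recent events per source and list expected sources that went silent."""
    cutoff = now - window
    counts = Counter(e["source"] for e in events if e["time"] >= cutoff)
    return {
        "counts": dict(counts),
        "gaps": sorted(EXPECTED_SOURCES - set(counts)),
    }


now = datetime(2025, 6, 25, 12, tzinfo=timezone.utc)
events = [
    {"source": "endpoint", "time": now - timedelta(hours=1)},
    {"source": "vpn", "time": now - timedelta(hours=3)},
    {"source": "antivirus", "time": now - timedelta(days=3)},  # too old to count
]
report = coverage_report(events, now)
print(report["gaps"])  # ['antivirus', 'cloud_iaas', 'cloud_saas']
```

A silent source is not proof of a problem, but it is a prompt to check whether a forwarder broke or the source was never onboarded.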

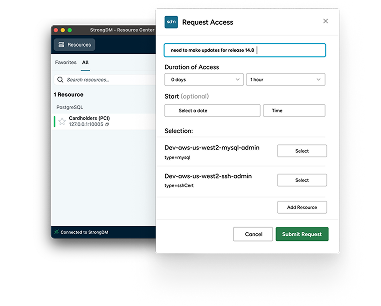

Get Enhanced Security Logging with StrongDM

StrongDM logs critical events that happen in your infrastructure, including who did what, when, and where. These can then be replayed with pixel-perfect fidelity, or sent to a logging tool for further analysis. In addition, StrongDM uses JSON log structuring formats, which are easy to parse and consolidate.

With StrongDM, you get a complete set of tools for managing privileged access to your entire IT infrastructure, including logs that capture every activity and query. Additionally, StrongDM makes it simple to audit access permissions at any time.

Want to learn how StrongDM can help your organization optimize log management and monitoring? Book a demo today.

About the Author

John Martinez, Technical Evangelist, has had a 30+ year career in systems engineering and architecture, and has spent the last 13+ years working on the cloud, specifically cloud security. He's currently the Technical Evangelist at StrongDM, taking the message of Zero Trust Privileged Access Management (PAM) to the world. As a practitioner, he architected and created cloud automation, DevOps, and security and compliance solutions at Netflix and Adobe. He worked closely with customers at Evident.io, where he spoke about how cloud security should be done at conferences, meetups, and customer sessions. Before coming to StrongDM, he led an innovations and solutions team at Palo Alto Networks, working across many of the company's security products.
