NSA Zero Trust Maturity Guidance Explained (TL;DR Version)

Written by John Martinez

Last updated on: March 27, 2025


StrongDM is pleased to see that, in April 2024, the United States National Security Agency (NSA) released a Cybersecurity Information (CSI) sheet that explains why and how organizations, public and private, should adopt the Zero Trust (ZT) security model for the data tier of their infrastructure. At the core of the recommendations, an organization needs to know what data it possesses, how that data is being accessed, and how to control access to that data.

While the paper emphasizes the security at the data tier, the tacit recommendations made in the paper indicate that the NSA advocates for a Zero Trust approach across the entirety of enterprise environments. The paper was widely read here at StrongDM, and we were happy to see this level of commitment to Zero Trust coming from such an important organization. While not putting words into the mouths of its authors, it is clear they see the need for comprehensive, complete, and continuous Zero Trust principles to be applied for all enterprise security elements.

Spoiler alert: Security risk is not limited to data

While the threats to web applications and the databases that serve those applications are different, there is a direct connection between the two. In fact, the OWASP Top 10 list ranks Broken Access Control as the number one critical security risk to web applications: 94% of applications were tested for some form of broken access control, with an average incidence rate of 3.81%. These findings stem from things like over-privileged users, roles, and capabilities; bypassed access control checks; and elevation of privilege where none should exist.

It is clear that data access is fertile ground for attacks, but how can you separate data access from any other type of access? And for that matter, can you effectively address security vulnerabilities if you only look at access and not the resulting actions that occur ex post facto?

Without naming names, the NSA paper corroborates what the OWASP Top 10 list shows: in 2017, one of the major credit reporting agencies in the US had a massive data breach that resulted in the theft of sensitive personally identifiable information (PII) of 148 million people. The attackers exploited a vulnerable Apache Struts web application server, then moved laterally and stole credentials to breach the underlying data tiers. So, a breach occurred, of that there is no doubt. But the resulting damage was far greater because of activity that occurred after access was granted.

We want to make clear that these things – access and actions – and where they occur – databases, applications, Kubernetes clusters – are all intertwined in every modern infrastructure. So, we’ll highlight not just the access control recommendations in the NSA document, but also how StrongDM’s real time, Zero Trust continuous authorization platform can help enterprise security, compliance, and operations teams today.

Background

Before digging into the summary of the paper, it seems appropriate to boil the Zero Trust security model down to two principles:

Never Trust, Always Verify

Assume Breach

In the Zero Trust model, every connection to every system must be explicitly authenticated and authorized, regardless of its location on the network. The principle of least privilege (PoLP) is key to reducing the attack surface. We also assume that security threats exist both outside and inside of the network, and that those threats have either already breached or will imminently breach your network and resources.

This forces security, and adjacent teams, to implement continuous verification of credentials, security visibility into all of the resources, granular access control permissions to those resources, and strict policy enforcement of those controls.
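To make "never trust, always verify" concrete, here is a minimal sketch of a per-request authorization check. It is illustrative only: the `Session` fields, the `GRANTS` table, and the 15-minute re-verification window are assumptions made for this example, not values from the NSA paper or from StrongDM's product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Session:
    user: str
    roles: frozenset
    authenticated_at: datetime
    device_trusted: bool


# Hypothetical least-privilege grants: role -> set of (action, resource) pairs.
GRANTS = {
    "analyst": {("read", "reports-db")},
    "dba": {("read", "reports-db"), ("write", "reports-db")},
}

MAX_CREDENTIAL_AGE = timedelta(minutes=15)  # assumed re-verification window


def authorize(session, action, resource, now):
    """Re-evaluate every request; network location is never an input."""
    if now - session.authenticated_at > MAX_CREDENTIAL_AGE:
        return False  # stale credentials: verify again before proceeding
    if not session.device_trusted:
        return False  # assume breach: an unverified device gets nothing
    return any(
        (action, resource) in GRANTS.get(role, set()) for role in session.roles
    )


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
ana = Session("ana", frozenset({"analyst"}), now - timedelta(minutes=5), True)
print(authorize(ana, "read", "reports-db", now))   # granted to the analyst role
print(authorize(ana, "write", "reports-db", now))  # not granted, so denied
```

The point of the sketch is that the check runs on every action, not once at login, so a session that was valid a few minutes ago can still be refused.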

StrongDM specifically addresses the Zero Trust Security model with our Dynamic Privileged Access Management architecture. We provide our customers with the platform to implement continuous authorization of users needing to access resources with sensitive data in real time.

The Data Pillar and its Capabilities

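Before we get into the capabilities of the Data Pillar, we should note all seven pillars of ZT, which are outlined in the DoD Zero Trust Reference Architecture:

- User

- Device

- Application and Workload

- Data

- Network and Environment

- Automation and Orchestration

- Visibility and Analytics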

The Data Pillar is the core of the ZT Reference Architecture for good reason: as the data breach referenced above shows, that’s where every organization’s most valuable assets are stored.

The NSA paper summarizes the core of the data pillar this way:

Secure data so it is accessed exclusively by authorized users

The paper organizes seven core capabilities into a maturity model:

- Data catalog risk alignment

- Enterprise data governance

- Data labeling and tagging

- Data monitoring and sensing

- Data encryption and rights management

- Data loss prevention

- Data access control

We won’t be going through every capability, but will highlight the areas where StrongDM has deep alignment within our core product functionality.

StrongDM Applies Zero Trust Continuously

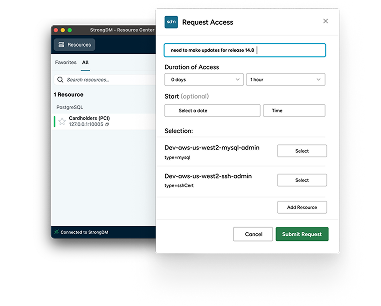

StrongDM’s core product capabilities help with all maturity levels of the Data access control capability. The majority of the controls are addressed by core product functionality and by StrongDM’s Policy Engine, which implements Policy-Based Access Control (PBAC) with granular access permissions and continuous contextual security evaluation and authorization of a user’s session.

The following table shows the mappings in the Data Pillar maturity model that relate to StrongDM product functionality and how we help:

| Capability | Maturity Level | Control | How StrongDM Helps |
| --- | --- | --- | --- |
| Data catalog risk alignment | Preparation | Data ownership is identified | Once owners are identified, roles with users can be created to be used in Dynamic Access Rules to allow access, and Strong Policies can be authored to include those principals to further refine fine-grained permissions. |
| Data catalog risk alignment | Intermediate | Data usage patterns are established | Data usage patterns can be used to build identity actions performed on databases, and those actions can be included in Strong Policies to refine fine-grained permissions. |
| Data catalog risk alignment | Advanced | Data is known according to risk levels | Identifying data that is at higher risk or sensitivity can be used to create Strong Policies with triggers such as approval workflows and MFA to require additional security friction. |
| Enterprise data governance | Intermediate | Organization establishes just-in-time and just-enough data access control policies | Access Workflows, along with Dynamic Access Rules and Strong Policy Engine triggers, enable a strong solution for just-in-time and just-enough access, along with authorization triggers for critical operations. |
| Enterprise data governance | Advanced | Rules and access controls are automated through central policy management | Strong Policy Engine enables centralized policy management and enforcement for fine-grained access control with continuous security context. |
| Data labeling and tagging | Intermediate | Machine enforceable data access controls are implemented | Access Workflows, along with Dynamic Access Rules and Strong Policy Engine triggers, enable a strong solution for just-in-time and just-enough access, along with authorization triggers for critical operations. |
| Data access controls | Preparation | Organizational policy is developed with enterprise-wide central management solutions in mind. | Organizational policies can be coded into access policies, and Strong Policy Engine enables centralized policy management and enforcement for fine-grained access control with continuous security context. |
| Data access controls | Preparation | Ensure appropriate access to, and use of, data based on the data and user/NPE/device properties. | Access Workflows, along with Dynamic Access Rules and Strong Policy Engine triggers, enable a strong solution for just-in-time and just-enough access, along with authorization triggers for critical operations. |
| Data access controls | Preparation | A software defined storage (SDS) policy and an enterprise Identity Provider (IdP) integration plan are developed. | StrongDM integrates with your IdP's identity lifecycle capabilities and onboarding / offboarding via SCIM; Roles are used in conjunction with Access Workflows and Dynamic Access Rules. |
| Data access controls | Basic | Policy Based Access Controls (PBAC) are established. PBACs inform data access decisions using attributes determined by policy rules. | Strong Policy Engine enables centralized policy management and enforcement for fine-grained access control with continuous security context. |
| Data access controls | Intermediate | Attribute Based Access Controls (ABAC) are defined and established, ensuring identity attributes correspond to appropriate data objects. | Strong Policy Engine enables centralized policy management and enforcement for fine-grained access control with continuous security context. |
| Data access controls | Intermediate | Roles are defined and implemented ensuring access to data dependent on proper user roles within the organization. | StrongDM integrates with your IdP's identity lifecycle capabilities and onboarding / offboarding via SCIM; Roles are used in conjunction with Access Workflows and Dynamic Access Rules. |
| Data access controls | Advanced | Individual and policy based access controls are established and automated central management solutions are fully integrated to manage changes from the central controller. | Strong Policy Engine enables centralized policy management and enforcement for fine-grained access control with continuous security context. |
| Data access controls | Advanced | ABAC, RBAC, and PBAC controls are further refined to provide more granular access regulations. | Strong Policy Engine enables centralized policy management and enforcement for fine-grained access control with continuous security context. |
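Several rows above lean on just-in-time and just-enough access with approval triggers for higher-risk data. The sketch below shows one way that idea could look in code. It is a simplified model, not StrongDM's Access Workflows API: the `Grant` class, the `RISK_LEVEL` map, and the resource names are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    user: str
    resource: str
    actions: frozenset    # just-enough: only the actions requested
    expires_at: datetime  # just-in-time: access lapses automatically


# Hypothetical risk tiers; a real deployment would derive these from its data catalog.
RISK_LEVEL = {"marketing-db": "low", "payments-db": "high"}


def request_access(user, resource, actions, minutes, now, approved=False):
    """Return a time-boxed Grant, or None if a required approval is missing."""
    if RISK_LEVEL.get(resource, "high") == "high" and not approved:
        return None  # unknown or high-risk data requires an approval step
    return Grant(user, resource, frozenset(actions), now + timedelta(minutes=minutes))


def is_allowed(grant, user, resource, action, now):
    """Check a single action against a grant at the time of use."""
    return (
        grant is not None
        and grant.user == user
        and grant.resource == resource
        and action in grant.actions
        and now < grant.expires_at
    )
```

Treating unlisted resources as high risk is a deliberate choice in this sketch: data that has not yet been cataloged gets the strictest handling by default.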

Achieving Zero Trust With Strong Policy Engine

One of the key benefits of the Strong Policy Engine is its powerful, granular policy language, which is based on the Cedar Policy Language and enforces policies in near real time.

Strong Policy Engine implements the PARC model, which has four parts:

- Principal: Users, groups, roles

- Action: The valid operations defined for resources

- Resource: The object being operated on, in StrongDM’s case, an infrastructure resource

- Context: Additional attributes that can be used to evaluate during the policy evaluation
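The four PARC parts can be sketched as a tiny policy evaluator. This is plain Python written for illustration, not Cedar syntax and not the Strong Policy Engine's API; the `Request`, `Policy`, and `decide` names, and the example principals, actions, and resources, are hypothetical. The decision rule mirrors Cedar's documented behavior of denying by default and letting any matching `forbid` override a `permit`.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Request:
    principal: str  # who is asking, e.g. a user or role name
    action: str     # the operation being attempted
    resource: str   # the infrastructure resource being targeted
    context: dict = field(default_factory=dict)  # extra attributes, e.g. MFA status


@dataclass
class Policy:
    effect: str  # "permit" or "forbid"
    principal: str
    action: str
    resource: str
    when: Optional[Callable[[dict], bool]] = None  # optional condition on context

    def matches(self, req):
        return (
            self.principal == req.principal
            and self.action == req.action
            and self.resource == req.resource
            and (self.when is None or self.when(req.context))
        )


def decide(policies, req):
    """Default deny; any matching forbid overrides every permit."""
    effects = [p.effect for p in policies if p.matches(req)]
    if "forbid" in effects:
        return "deny"
    return "allow" if "permit" in effects else "deny"


# Hypothetical policies: DBAs may query production only with verified MFA,
# and may never drop tables there.
POLICIES = [
    Policy("permit", "role:dba", "query", "prod-postgres",
           when=lambda ctx: ctx.get("mfa_verified", False)),
    Policy("forbid", "role:dba", "drop_table", "prod-postgres"),
]
```

Calling `decide(POLICIES, Request("role:dba", "query", "prod-postgres", {"mfa_verified": True}))` returns `"allow"`, while the same request without the MFA flag in its context falls through to the default deny.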

Additionally, Strong Policy Engine can trigger requests for further evaluation and authorization, such as asking for an MFA code or requiring an additional approval workflow, which implements advanced maturity controls in the ZT Data Pillar. The diagram below maps policy constructs to the ZT Pillars at a high level:

As stated earlier, StrongDM specifically addresses the Zero Trust Security model with our Dynamic Privileged Access Management architecture and the Strong Policy Engine.

We call this Zero Trust PAM, and StrongDM is the only access solution that takes this approach (and achieves the outcomes intended from rigorous ZT standards).

🕵 Learn how Better.com uses StrongDM to adopt Zero Trust access.

If you would like to see the only platform to implement continuous authorization of users needing to access resources with sensitive data in real time, schedule a demo today!

Next Steps

StrongDM unifies access management across databases, servers, clusters, and more—for IT, security, and DevOps teams.

- Learn how StrongDM works

- Book a personalized demo

- Start your free StrongDM trial


About the Author

John Martinez, Technical Evangelist, has had a 30+ year career in systems engineering and architecture, and has spent the last 13+ years working on the Cloud, specifically Cloud Security. He's currently the Technical Evangelist at StrongDM, taking the message of Zero Trust Privileged Access Management (PAM) to the world. As a practitioner, he architected and created cloud automation, DevOps, and security and compliance solutions at Netflix and Adobe. He worked closely with customers at Evident.io, where he told the world how cloud security should be done at conferences, meetups, and customer sessions. Before coming to StrongDM, he led an innovations and solutions team at Palo Alto Networks, working across many of the company's security products.
